The human brain is a unique thing. The plasticity of the brain alone allows certain unused sections of this organ to be reallocated for other purposes. This is why a blind person tends to have "heightened senses". As an educator, I'm often asked why it's so important for an audio engineer to understand sound theory and psychoacoustics. My answer is always the same: "As an audio engineer you are constantly mindf*cking the listener into feeling certain emotions."

The technical aspect of things is very important, but it's the science behind the art that gets us to our end goal. A visual artist has various tools at his or her disposal. It's important to know the difference between paintbrushes, or when to use charcoal instead of acrylic. However, it's just as important to understand that color is just light hitting the eye, and that our brain prefers certain color schemes over others. The same kind of thinking belongs in the audio realm. We are artists, and to maximize our skills in this art form, we must understand the science behind it.

It all starts with the perception of sound. Sound waves are longitudinal waves of air particles slapping against the tympanic membrane, or eardrum. The tiny bones of the middle ear, known as the ossicles, transfer this acoustical energy into mechanical energy. The last of these bones, the stapes, pushes against the oval window and moves the fluid contained in the cochlea in the inner ear. This moving fluid in turn bends tiny hair cells, which fire signals along the auditory nerve to the brain. This whole process is known as transduction (turning one form of energy into another), and it is very similar to what a microphone does. A mic placed in front of a source converts the acoustical energy in the space into mechanical energy through the movement of its diaphragm, and then into electrical energy in the form of a voltage.
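To put rough numbers on the microphone side of transduction, here is a minimal Python sketch. It assumes the standard 20 µPa reference for dB SPL and a hypothetical microphone sensitivity of 2 mV/Pa (a made-up figure for illustration; real mics vary widely and publish their own specs). It converts a sound pressure level into pascals, then into the voltage an idealized, perfectly linear mic would put out.

```python
P_REF = 20e-6  # reference pressure for dB SPL, in pascals (20 µPa)


def spl_to_pascals(spl_db: float) -> float:
    """Convert a sound pressure level in dB SPL to RMS pressure in pascals."""
    return P_REF * 10 ** (spl_db / 20)


def mic_output_volts(spl_db: float, sensitivity_v_per_pa: float = 0.002) -> float:
    """Acoustic -> electrical: RMS output voltage of an idealized linear mic.

    The 2 mV/Pa default is a hypothetical sensitivity chosen for illustration.
    """
    return sensitivity_v_per_pa * spl_to_pascals(spl_db)


for level in (60, 94, 120):
    pa = spl_to_pascals(level)
    mv = mic_output_volts(level) * 1000
    print(f"{level} dB SPL -> {pa:.4f} Pa -> {mv:.3f} mV")
```

94 dB SPL comes out at almost exactly 1 Pa, which is why mic sensitivity is usually quoted at that level.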

Anyone who's ever used a microphone understands that transduction is never perfect. Think of how many mics have been shuffled around in front of a guitar cabinet trying to dial in that "sweet spot". Well, the brain and its perception of sound are just like that. The mere fact that the brain and the ear are biological, not engineered, means that everyone is going to hear sound slightly differently. As audio engineers, we must be aware of the traits and nature of our own brains and the brains of our listeners. To make a long story short, your brain is not that good at hearing, and it's constantly lying to you. It's inept at accurately gathering information from a sound source, and over thousands and thousands of years we have accepted this as "listening". In fact, that's what makes listening to music so pleasurable.

Human hearing is binaural. This means that we gather sound information using two sources: a left ear and a right ear. This is what makes the localization of sound possible. Each ear evolved as part of a system to help a human hear the nearest water source and from which direction a saber-toothed tiger is attacking. Interaural time difference and interaural intensity difference describe how the brain takes a single sound source collected by both ears and determines its location in the horizontal plane. Both ears will pick up a sound that is off to the left, but not equally: at the left ear the sound will be more intense and arrive earlier. This is the basis of stereo imaging in a modern-day control room. You can test this out yourself. Sit dead center in front of a pair of speakers. Now pan a mono signal to the center of your console so that it is sent to both speakers equally. The sound appears to be coming from dead center, right in front of you. Although there is no center speaker, your brain thinks the sound is coming from directly in front of you because the arrival time and intensity from each speaker are nearly identical. See, I told you. Your brain is no good at this listening thing... it's lying to you!

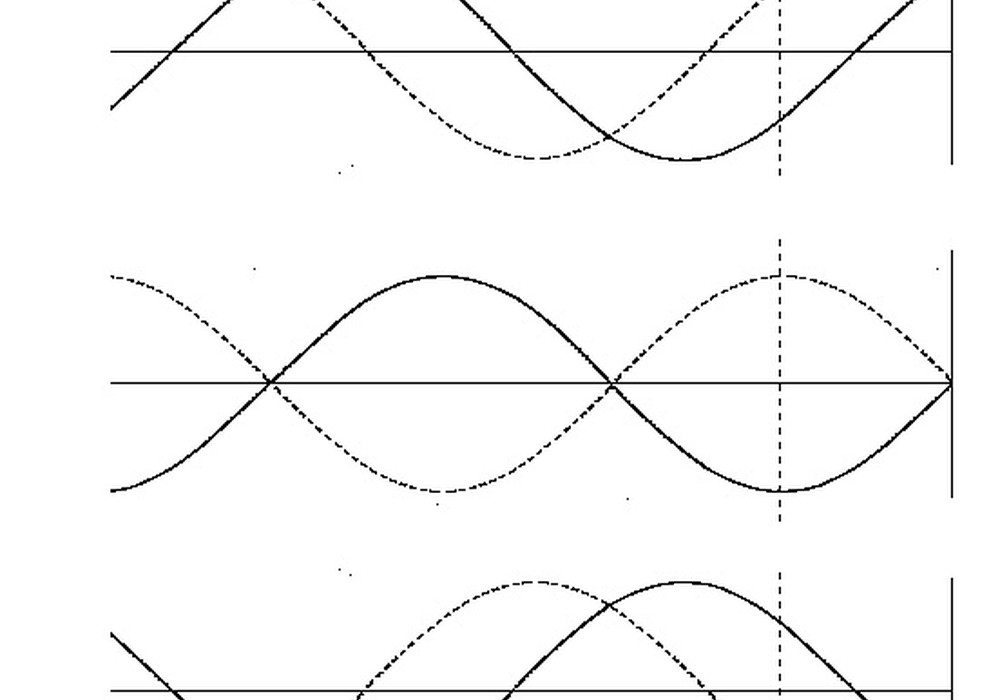
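The two localization cues can be roughed out in a few lines of Python. The time cue uses Woodworth's classic spherical-head approximation, ITD ≈ (r/c)(θ + sin θ), with an assumed head radius of 8.75 cm and a speed of sound of 343 m/s; real heads and ears deviate from a sphere. The level side shows one common pan law, constant-power (sine/cosine) panning, in which a centered mono signal goes to each speaker at about -3 dB. Consoles use other pan laws too (-4.5 dB, -6 dB), so treat this as an illustration, not a description of your desk.

```python
import math

HEAD_RADIUS_M = 0.0875  # assumed average head radius
SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature air


def woodworth_itd(azimuth_deg: float) -> float:
    """Interaural time difference in seconds for a distant source.

    Woodworth's spherical-head approximation, valid for 0-90 degrees
    (0 = dead ahead, 90 = directly to one side).
    """
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND) * (theta + math.sin(theta))


def constant_power_pan(position: float) -> tuple[float, float]:
    """Left/right gains for a pan position from -1 (hard left) to +1 (hard right).

    Sine/cosine law: left**2 + right**2 == 1, so total power stays constant.
    """
    angle = (position + 1) * math.pi / 4  # maps -1..+1 onto 0..pi/2
    return math.cos(angle), math.sin(angle)


for az in (0, 30, 60, 90):
    print(f"{az:>2} deg -> ITD {woodworth_itd(az) * 1000:.3f} ms")

left, right = constant_power_pan(0.0)
print(f"center: L={left:.3f} ({20 * math.log10(left):.1f} dB), R={right:.3f}")
```

At 0° the time difference is zero and the centered gains are equal, which is exactly the "identical arrival time and intensity" condition behind the phantom center. At 90° the model gives roughly two-thirds of a millisecond, about the largest time difference a head this size produces.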

And it goes deeper than this. The auditory canal is the section of the outer ear that runs from the pinna to the eardrum. It is a tube closed at one end, with an average length of about 2.7 cm. Everything in nature resonates, and the ear canal does so at a frequency between 1 and 4 kHz. Interestingly, this is also a key frequency range for human speech. In other words, our ears evolved to be most sensitive to the sound of a crying baby, a loved one or a person in danger. Two researchers, Harvey Fletcher and Wilden Munson, demonstrated this with what are known as the Fletcher-Munson curves. In their study, a large number of listeners put on headphones and matched the "loudness" of two pure tones. Each time, the listener heard a 1 kHz reference tone and a second test tone, and the level of the test tone was adjusted until the listener agreed that it was just as "loud" as the 1 kHz reference. Not only does human hearing get weaker as we approach the edges of the audible range (around 20 Hz and 16 kHz), but the curves also flatten out at higher levels. All frequencies sound more even when we turn everything way up!
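Both ideas in this paragraph can be checked with a little arithmetic. A tube closed at one end resonates where its length equals a quarter wavelength, f = c / 4L, so a 2.7 cm canal lands near 3 kHz (assuming 343 m/s for the speed of sound). For the loudness side, this sketch borrows the standard A-weighting curve (IEC 61672) as a stand-in: it was derived from a low-level equal-loudness contour, so it only roughly approximates one of the Fletcher-Munson curves and, unlike the real curves, does not change with level.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature air


def quarter_wave_resonance(length_m: float) -> float:
    """Fundamental resonance (Hz) of a tube closed at one end: f = c / (4 * L)."""
    return SPEED_OF_SOUND / (4 * length_m)


def a_weighting_db(freq_hz: float) -> float:
    """A-weighting gain in dB (IEC 61672 analog formula), normalized to 0 dB at 1 kHz."""
    f2 = freq_hz ** 2
    numerator = (12194.0 ** 2) * f2 ** 2
    denominator = (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20 * math.log10(numerator / denominator) + 2.0


print(f"Ear canal (2.7 cm): {quarter_wave_resonance(0.027):.0f} Hz")
for f in (20, 100, 1000, 3000, 10000, 16000):
    print(f"{f:>5} Hz: {a_weighting_db(f):+6.1f} dB")
```

The resonance comes out around 3.2 kHz, inside the 1-4 kHz range, and the weighting curve is at its most generous in roughly that same region while falling off steeply toward 20 Hz.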

Audio engineers seem to forget that the human ear is a sensitive instrument with a threshold of hearing, a threshold of pain and a built-in protection circuit. When the ear picks up sounds above 95 dB SPL, something called the acoustic (or auditory) reflex begins to kick in. Your brain tells the tiny muscles of the middle ear to contract: the tensor tympani tightens the eardrum, and the stapedius pulls the last ossicle (the stapes) away from the oval window. Much like tightening the head on a snare drum raises its pitch, tightening the eardrum can cause the brain to perceive sound incorrectly. When mixing at 120 dB SPL (about the level of a loud dance club), don't mix for longer than 7-8 minutes before taking a break. Otherwise, what you're hearing is distorted in pitch and loudness, because the brain is busy protecting the ear.
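The 7-8 minute figure matches the U.S. OSHA formula for permissible noise exposure, which allows 8 hours at 90 dBA and halves the time for every 5 dB increase. NIOSH's recommended limit is stricter: 8 hours at 85 dBA, halving every 3 dB. This sketch computes both, treating dB SPL as dBA (a simplification).

```python
def permissible_minutes(level_db: float, criterion_db: float, exchange_db: float) -> float:
    """Allowed daily exposure in minutes: 8 h at the criterion level,
    halved for every `exchange_db` above it."""
    return 480.0 / 2 ** ((level_db - criterion_db) / exchange_db)


def osha_minutes(level_db: float) -> float:
    # OSHA permissible exposure limit: 90 dBA criterion, 5 dB exchange rate
    return permissible_minutes(level_db, 90.0, 5.0)


def niosh_minutes(level_db: float) -> float:
    # NIOSH recommended exposure limit: 85 dBA criterion, 3 dB exchange rate
    return permissible_minutes(level_db, 85.0, 3.0)


for level in (90, 100, 110, 120):
    print(f"{level} dB: OSHA {osha_minutes(level):6.1f} min, NIOSH {niosh_minutes(level):6.2f} min")
```

At 120 dB the OSHA formula gives exactly 7.5 minutes, while the NIOSH recommendation drops to under ten seconds.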

The brain is also quite lousy at detecting discrete reflections. A direct sound will hit the ears, followed closely by a number of reflections as the sound bounces off surfaces. The brain is too slow to separate these arrivals, so it lumps them together as a single sound. The Haas effect (also known as the precedence effect) states that multiple sounds arriving at the ear within 5 to 35 milliseconds of each other will fuse in our brain into a single, unified sound. The reflections off numerous surfaces arrive at the ears within this window, and the listener ends up with a blur of reflections referred to as reverb. This is what defines "space", and it's these very reflections that determine the depth of a mix from front to back. Instruments with a tad more reverb will tend to sit further back in a mix and sound more distant. Pre-delay is the time between when your ear receives the direct sound and the first early reflection off a surface. If an instrument needs to sit up front in a mix but still have lush reverb, increase the pre-delay. Because the direct sound will arrive well before the first reflections, it will sound as if the listener is sitting right next to the instrument, but in a very large room. You want drums that are right up front in a mix, smacking you in the face? Record your drum set on a rooftop or in an open field, where there are very few reflections. The Haas effect is also good for widening the area an instrument takes up in the stereo spread. Take a mono piano panned between right and center, duplicate the track, apply a delay of 5-15 ms to the copy and pan the copy hard right. The piano will feel as if it's taking up the whole right side of the mix.
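Two parts of this paragraph are easy to sketch in Python. The first estimates a room's natural pre-delay: the gap between the direct sound and a reflection is the extra path length divided by the speed of sound (assumed 343 m/s here). The second is a bare-bones version of the Haas widening trick on a plain list of samples: make a copy of the mono track and delay it by a few milliseconds. The function names and the 10 ms default are hypothetical choices for illustration, not any DAW's API.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature air


def reflection_gap_ms(direct_path_m: float, reflected_path_m: float) -> float:
    """Time between the direct sound and a reflection, in milliseconds."""
    return (reflected_path_m - direct_path_m) / SPEED_OF_SOUND * 1000.0


def haas_copy(mono: list[float], sample_rate: int, delay_ms: float = 10.0) -> list[float]:
    """Return a delayed duplicate of a mono track (same length, zero-padded at the start).

    Leave the original panned where it was and send this copy hard to one side;
    keeping the delay within roughly 5-15 ms lets the ear fuse the two.
    """
    delay_samples = round(sample_rate * delay_ms / 1000.0)
    if delay_samples >= len(mono):
        return [0.0] * len(mono)
    return [0.0] * delay_samples + mono[: len(mono) - delay_samples]


# A player 2 m from the listener, plus a wall bounce that travels 12 m in total.
print(f"pre-delay from the room: {reflection_gap_ms(2.0, 12.0):.1f} ms")

# A single click stands in for audio; at 48 kHz a 10 ms delay is 480 samples.
track = [1.0] + [0.0] * 999
copy = haas_copy(track, 48_000, 10.0)
print(copy.index(1.0))  # position of the click in the delayed copy
```

With the two paths 10 m apart, the room's own gap is about 29 ms. Bigger rooms, and sources placed farther from the walls, stretch that gap, which is what a pre-delay control imitates.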

You want to know another interesting fact? Neurologists have recently been doing a vast amount of research on the impact of music on the brain. One of the things that still stumps these brilliant minds is how humans perceive pitch. It remains a mystery how the brain actually classifies pitch. In his excellent book This Is Your Brain on Music, Daniel Levitin [issue 74] describes how everyone can sing the melody of "Happy Birthday to You" correctly, yet people start it in all sorts of different keys. How is that possible? Like I said, your brain kind of sucks at this. Judging one pitch in comparison to another is easy, but finding a pitch from a cold start with no reference is difficult for the majority of people. Not only that, but the exact pitch the brain hears changes as the level increases. When an instrument is turned up higher and higher, the brain perceives high frequencies as going up in pitch and low frequencies as dropping in pitch. This is the main reason never to let a musician tune up in the headphones. Musicians traditionally like their headphones relatively loud, and cranking a headphone amp leads to incorrect pitch perception. Before you know it, every instrument in the room is out of tune. Likewise, a vocalist can end up singing flat or sharp during the recording process, because their headphones are so loud that their brain hears a slightly off-key pitch. Keep the headphones low and tune up in the control room, because your brain does not like you.
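A tuner sidesteps the brain's cold-start problem by comparing against a fixed reference instead of memory. Here is a minimal sketch of what one computes, assuming twelve-tone equal temperament with A4 = 440 Hz: the nearest note name and how far off it is in cents (hundredths of a semitone), using cents = 1200 · log2(f / f_ref).

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
A4_HZ = 440.0  # assumed tuning reference
A4_MIDI = 69   # MIDI note number of A4


def nearest_note(freq_hz: float) -> tuple[str, float]:
    """Return (note name with octave, offset in cents) for a frequency in Hz."""
    semitones_from_a4 = 12 * math.log2(freq_hz / A4_HZ)
    midi = round(A4_MIDI + semitones_from_a4)
    target_hz = A4_HZ * 2 ** ((midi - A4_MIDI) / 12)
    cents = 1200 * math.log2(freq_hz / target_hz)
    name = NOTE_NAMES[midi % 12] + str(midi // 12 - 1)
    return name, cents


for f in (440.0, 261.63, 445.0, 98.0):
    note, off = nearest_note(f)
    print(f"{f:7.2f} Hz -> {note:<3} {off:+5.1f} cents")
```

The 445 Hz case reads roughly 20 cents sharp of A4.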

But pitch can also be one of your brain's allies. Much like a visual artist has color wheels, audio engineers have references for pitches that go well together. If you find yourself with a pop mix whose chords move from C major to A minor and back, try this simple trick: find a pitch that is relevant throughout the track (in this case 'C', which appears in both chords) and EQ everything with regard to that pitch and its octaves. Octaves work on a 2:1 ratio, and middle C has a fundamental of roughly 262 Hz. Your brain will thank you when you start cutting or gently boosting at 262 Hz, 524 Hz, 1048 Hz, 2096 Hz and so on. Your brain easily makes sense of those frequencies together because they are all whole-number multiples of the same fundamental (its 1st, 2nd, 4th and 8th harmonics).
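A quick way to see the arithmetic: a note's harmonic series is every whole-number multiple of its fundamental, and its octaves are the subset at powers of two. This sketch uses the rounded 262 Hz figure for middle C (equal temperament puts C4 at about 261.63 Hz).

```python
def harmonic_series(fundamental_hz: float, count: int) -> list[float]:
    """Whole-number multiples of the fundamental: 1x, 2x, 3x, ..."""
    return [fundamental_hz * n for n in range(1, count + 1)]


def octave_series(fundamental_hz: float, count: int) -> list[float]:
    """Repeated doubling (the 2:1 ratio): 1x, 2x, 4x, 8x, ..."""
    return [fundamental_hz * 2 ** k for k in range(count)]


C4_HZ = 262.0  # rounded middle C; equal temperament gives ~261.63 Hz

harmonics = harmonic_series(C4_HZ, 8)
octaves = octave_series(C4_HZ, 4)
print("harmonics:", harmonics)
print("octaves:  ", octaves)
print("octaves are harmonics:", all(f in harmonics for f in octaves))
```

Frequencies like 786 Hz (3 × 262, close to G5) also belong to C's harmonic series, but they aren't octaves of it.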

The brain is enormously complex and constantly wrapped up in a multitude of activities. It's sometimes very difficult for the average listener to enjoy complex, interwoven music that relies on a deep understanding of music theory. We can use this to our advantage. The average listener enjoys raw, simple music that appeals to our emotions and not our math skills. We can create emotions by understanding the way the brain perceives space, loudness, pitch and identical signals arriving from multiple places. We can pull people to the darkest depths of their minds by reminding them how empty and hollow a large recording room can be. We can force tailbones to wiggle by pumping the ear with in-your-face drum sounds that pound at the skull. We can cuddle the subconscious with the pleasing, soothing, odd-order harmonics of natural distortion, square-waving us hello.
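The square-wave pun has a real basis: a square wave contains only odd harmonics, and any distortion with an odd-symmetric transfer curve (one that bends the positive and negative halves of a waveform the same way) adds only odd-order harmonics too. This sketch drives a sine through a tanh soft clipper, a common stand-in for "natural" saturation rather than a model of any specific tube, tape machine or circuit, and measures each harmonic with a plain DFT. The `bias` parameter is a hypothetical knob that makes the clipping lopsided so even-order harmonics appear.

```python
import math


def harmonic_levels(drive: float, bias: float = 0.0, max_harmonic: int = 5,
                    n_samples: int = 1024, cycles: int = 8) -> dict[int, float]:
    """Amplitude of each harmonic after soft-clipping a sine with tanh.

    The window holds exactly `cycles` periods, so harmonic h falls on DFT bin
    h * cycles and there is no spectral leakage to blur the result.
    """
    signal = [
        math.tanh(drive * math.sin(2 * math.pi * cycles * n / n_samples) + bias)
        for n in range(n_samples)
    ]
    levels = {}
    for h in range(1, max_harmonic + 1):
        k = h * cycles  # DFT bin of harmonic h
        re = sum(x * math.cos(2 * math.pi * k * n / n_samples) for n, x in enumerate(signal))
        im = sum(x * math.sin(2 * math.pi * k * n / n_samples) for n, x in enumerate(signal))
        levels[h] = 2 * math.hypot(re, im) / n_samples  # peak amplitude of that harmonic
    return levels


for label, bias in (("symmetric", 0.0), ("asymmetric", 0.5)):
    levels = harmonic_levels(drive=3.0, bias=bias)
    print(label, {h: round(a, 3) for h, a in levels.items()})
```

The symmetric run leaves the 2nd and 4th harmonics at essentially zero, while the biased run fills them in.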

Don't get me wrong, though. I'm completely fascinated by the human brain. It remains one of the most mysterious aspects of the human species, and it's what separates us from all other living things. But I hope I've illustrated the importance of understanding the "how" and the "why" of what an audio engineer does. Our talent lies partly in our skill at manipulating the art form and partly in our willingness to understand the nature of human hearing. We can twist knobs until it sounds like rock 'n' roll, but armed with this knowledge we can figure out how to do it again and again and again.

Paul D'Errico records/mixes out of Miami, FL where he also holds a spot as the Senior Lecturer for SAE Institute of Miami. paulde@sae.edu