I interviewed [Eventide co-founder] Richard Factor [Tape Op #130] in 2018, but when we first met, ages ago, you were doing a rack-mounted plug-in with Joe Waltz.

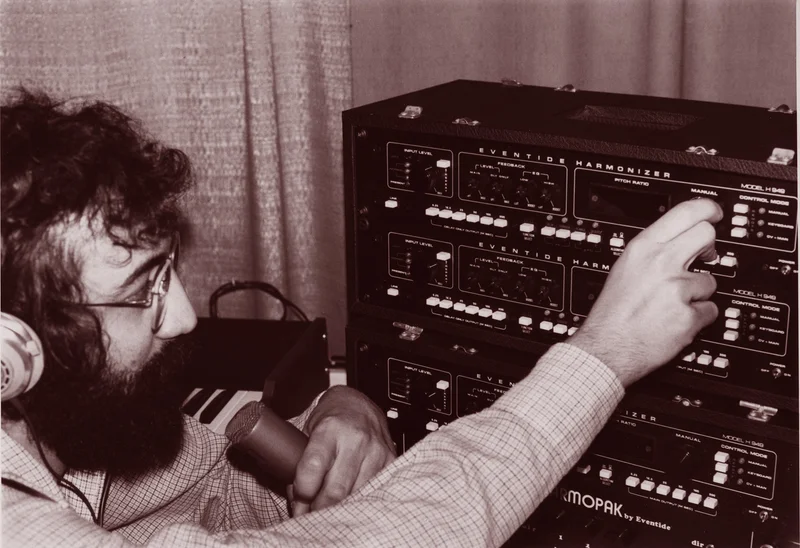

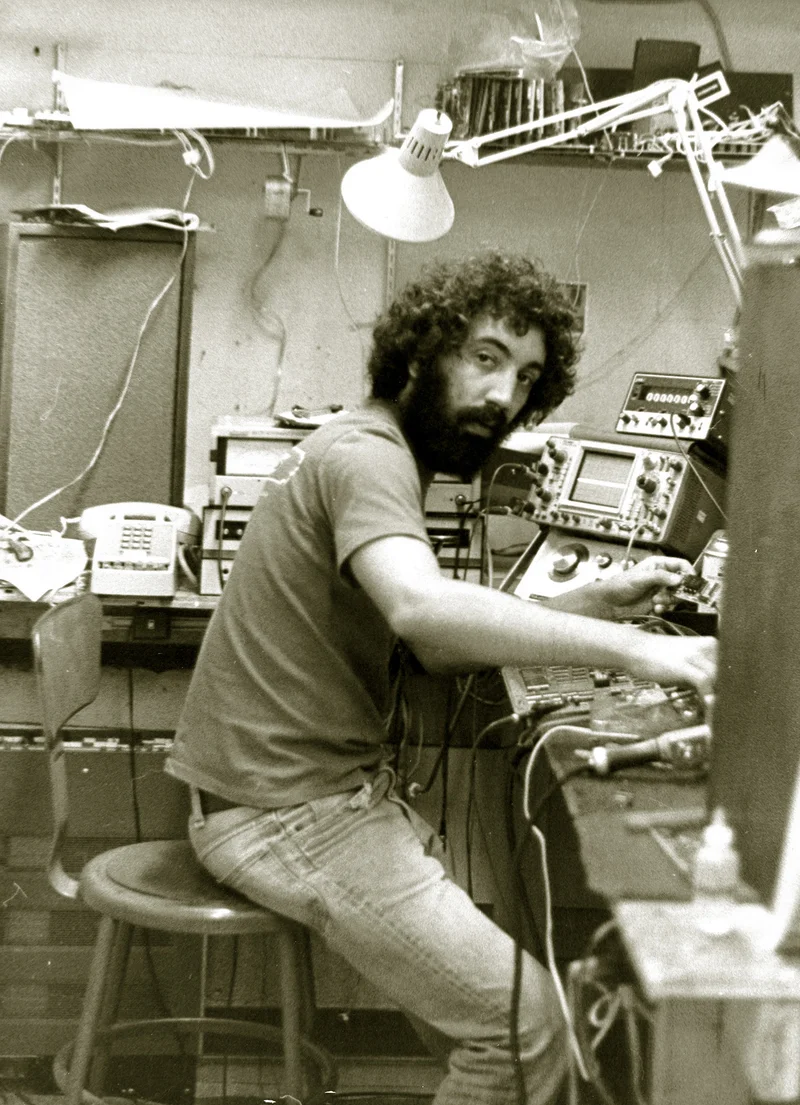

That’s Manifold Labs and Plugzilla. But “ages ago” fits me. I started with Eventide in ’73.

With Eventide being one of the early makers of digital audio hardware, did you ever imagine that it would all just be in a computer?

Sure. Yeah.

Once the H910 Harmonizer came out, you were basically writing code.

To my mind, all of this is obvious. So, when computers came out, it was clear that everything someday could be in the computer. In fact, one of the first products we made was a real time one-third octave spectrum analyzer for the [Commodore] PET computer, IBM PCs, and for [Apple] Macs. You could plug a card into the computer and the computer became a test instrument. No one had done that before. And then when DSP [Digital Signal Processing] chips happened, I founded a company called Ariel and we were putting DSP cards into PCs so people could write software for them. But all of this was foretold. When I was in graduate school at CUNY, I was reading Bell Labs' journals [Bell Labs Technical Journal] and guys like [Manfred] Schroeder were saying that once you're in ones and zeros [digitized], you can do all manner of stuff. It was just a matter of the technology advancing to the point where we could do things in real time at audio rate. Computers existed, but the challenge was for full spectrum audio. For 20 kHz, you want to sample at least at 50 kHz. That means every 20 microseconds, you've got a slot to do something. When Richard [Factor] designed the first shift register-based delay line, nothing was happening in the digital world. All you could do at that rate was move the bits along the chain. When I designed the first Harmonizer, RAM [Random Access Memory] had just come out, with 4 kilobytes of RAM. And all I could do at a 50 kHz or so audio sampling rate was come up with one address to write and another address to read – two operations every sample period. When we got to the late '70s, we were still not at the point where a general purpose CPU [Central Processing Unit] could do anything at audio rate, but there were special purpose processors. There were these things called bit slice [processors]. But it came to a point where we could do about a hundred things every sample period. And that's the point where you saw the first digital reverbs.
If you have a hundred operations, real time at audio rate, you can build a bunch of delays and feedback around them – feedback networks – and simulate what a room sounds like.
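[A minimal Python sketch of the ideas in this answer, offered as an illustration rather than a description of any Eventide hardware. It shows the 20 microsecond budget at 50 kHz, a RAM-style delay line that does one read and one write per sample period, and a delay with feedback around it. The names `DelayLine`, `feedback_comb`, and `sample_period_us` are hypothetical.]

```python
def sample_period_us(sample_rate_hz):
    """Time available per sample, in microseconds."""
    return 1_000_000 / sample_rate_hz


class DelayLine:
    """Circular buffer with a separate read address and write address."""

    def __init__(self, delay_samples):
        # One extra slot keeps the read address distinct from the write address.
        self.buffer = [0.0] * (delay_samples + 1)
        self.delay = delay_samples
        self.write_addr = 0

    def read(self):
        # Operation 1: fetch the sample written `delay` periods ago.
        read_addr = (self.write_addr - self.delay) % len(self.buffer)
        return self.buffer[read_addr]

    def write(self, x):
        # Operation 2: store the new sample and advance the write address.
        self.buffer[self.write_addr] = x
        self.write_addr = (self.write_addr + 1) % len(self.buffer)


def feedback_comb(signal, delay_samples, feedback):
    """A delay with feedback around it: y[n] = x[n] + feedback * y[n - delay]."""
    line = DelayLine(delay_samples)
    out = []
    for x in signal:
        y = x + feedback * line.read()
        line.write(y)
        out.append(y)
    return out
```

[Feeding a single impulse into `feedback_comb` gives a train of echoes spaced `delay_samples` apart, each one scaled by `feedback` relative to the one before. Several of these run in parallel is the assumption this sketch makes about the simplest "bunch of delays and feedback" design.]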

Right. All the acoustics of a room.

We call that algorithmic reverb. Eventide and I did it with the SP 2016 [Signal Processor]. With the 2016 I went a little nuts, because I didn't want to just do reverb. I figured that I could do a hundred things. I could do band delays. I could do a vocoder. It was more of a general purpose processor. EMT did a digital reverb [EMT 250], the Lexicon 224, Quantec [Room Simulator] – all of those were algorithmic reverbs.

Right. They're not sampling spaces.

They're not modeling a given space; they're just giving you the sense of a real room. And what did that turn out to mean? If you build a Schroeder reverb, it doesn't sound like a room. Even if you get the early reflections right and have an FIR [Finite Impulse Response filter] for the early reflections, it still doesn't sound like a real room because he just had a bunch of delays with feedback in parallel. The echo density builds up linearly. But what happens in a real room is the echo density builds up exponentially. The way to make that happen in an algorithmic reverb is to interconnect the delays. Today that's called an FDN [Feedback Delay Network]. I built it for the 2016, before it was called a feedback delay network, but that's what it was. I thought it was a matrix.
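[A small Python sketch of the difference described here, under stated assumptions: four delay lines whose outputs are mixed back into the inputs through a feedback matrix. With the identity matrix each delay only feeds itself, which is the parallel arrangement. With an orthogonal Hadamard matrix every delay feeds every other, which is the interconnected case. The delay lengths, the gain, and the choice of a Hadamard matrix are illustrative, not the SP 2016 design.]

```python
IDENTITY = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

# 4x4 Hadamard matrix scaled by 1/2 so that it is orthogonal (energy preserving).
HADAMARD = [
    [0.5, 0.5, 0.5, 0.5],
    [0.5, -0.5, 0.5, -0.5],
    [0.5, 0.5, -0.5, -0.5],
    [0.5, -0.5, -0.5, 0.5],
]


def fdn_impulse_response(delays, matrix, gain, num_samples):
    """Impulse response of a feedback delay network with the given matrix."""
    buffers = [[0.0] * d for d in delays]
    positions = [0] * len(delays)
    response = []
    for n in range(num_samples):
        x = 1.0 if n == 0 else 0.0
        # Read the oldest sample from every delay line.
        outs = [buf[pos] for buf, pos in zip(buffers, positions)]
        response.append(sum(outs))
        # Mix the outputs through the matrix and write them back in.
        for i, buf in enumerate(buffers):
            fed_back = gain * sum(matrix[i][j] * outs[j] for j in range(len(outs)))
            buf[positions[i]] = x + fed_back
            positions[i] = (positions[i] + 1) % len(buf)
    return response


def count_echoes(response, threshold=1e-9):
    """Number of samples that carry an audible (non-negligible) echo."""
    return sum(1 for value in response if abs(value) > threshold)
```

[Running both matrices over the same delays, for example `[149, 211, 263, 293]` samples, and comparing `count_echoes` shows the interconnected network producing many more echoes in the same time window than the parallel one.]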

Sure. Technically it is.

So, now we get to the 1990s, and suddenly you could do a thousand things every sample period. Now you could excite a room, capture an impulse response of the room, and do convolution reverbs. But the reality is that we knew in the ’60s, maybe even in the ’50s, that you could measure a room, but there was never the processing power to allow us to implement convolution in real time. When convolution happened, I went, “Yeah, no surprise.” Was I interested? Not at all. Because with convolution reverb, you measure the room from here to here, but that's not really the real room. Okay, it's a room; what can I do with that? I could EQ it, but I was not interested. I was waiting for the day that, at audio rate, I could do tens of thousands of things per sample. There's another way to model the room, something that was written about in the literature years ago, and it's called modal. A room has modes; a room treats every frequency differently.
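[For readers who want to see what "convolution" means here, a bare-bones Python sketch: every input sample launches a scaled copy of the measured impulse response, and the copies are summed. This is the textbook direct form, chosen for clarity. Practical convolution reverbs typically use FFT-based methods instead, and the function name `convolve` is only illustrative.]

```python
def convolve(dry, impulse_response):
    """Direct-form convolution of a dry signal with a room impulse response."""
    wet = [0.0] * (len(dry) + len(impulse_response) - 1)
    for n, x in enumerate(dry):
        if x == 0.0:
            continue  # silent input samples contribute nothing
        for k, h in enumerate(impulse_response):
            # Each input sample adds a scaled, time-shifted copy of the response.
            wet[n + k] += x * h
    return wet
```

[The cost per input sample grows with the length of the impulse response, and the response itself is fixed: it describes one source position and one microphone position, which is the "from here to here" limitation mentioned above.]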

Oh yeah. In different places and ways all around the room.

Now, instead of having the static impulse response, you have thousands of modes. For each of those modes, you can control its amplitude, or level, and its center frequency. Here's one of the keys: If you can control each mode's decay differently, and you can control the phase or the delay between each of those modes, you have this wonderful, magical world where you can f#$% with sound in ways never before possible. I've been waiting for that. I can tell you that that's what we're doing. Why was this interesting to me? I play guitar, I've studied cello, now I’m downsizing and playing ukulele and I'm still in a band. When I was studying cello in the mid ’80s, my cello teacher, James Hoffman in Brooklyn, introduced me to a Russian immigrant named Isaak Vigdorchik. Isaak was brilliant: a luthier who played violin in the symphony orchestra, but he was also a scientist. He had emigrated from the Soviet Union, but in grad school he got his hands on a Cremona violin, and he told me that if he tapped the front or back plate up and down the violin he could hear the notes of the Western musical scale. It was his contention that the makers either lacquered or sanded the wood so it would ring out at the notes of the scale. He wrote a book, The Acoustical Systems of Violins of Stradivarius and Other Cremona Makers. I said, “Isaak, I don't believe you.” At the time, I had just designed the world's first audio rate FFT [Fast Fourier Transform] analyzer. Isaak was connected with someone at a museum; we were going to work together, get our hands on a violin, and see if what he was claiming was true – get a Stradivarius or one of those violins. He would say, “Anthony, the notes are in the wood.” That made me think that if I had a modal reverb I could put the notes in the room. I could modify the room so the room would ring out at the notes I wanted – or not ring out at certain notes.
Back in the day, when people had echo chambers or a bad sounding room that would ring out, you'd want to damp it to get that ringing down. I'm putting the ringing back in!
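[A hedged Python sketch of the modal idea: treat the room as a bank of resonators, each a decaying sinusoid with its own center frequency, amplitude, decay time, and phase, and sum them to get the room's response. This is the generic textbook formulation, assumed here for illustration. It is not Eventide's implementation, and the `Mode` fields are hypothetical names.]

```python
import math
from dataclasses import dataclass


@dataclass
class Mode:
    frequency_hz: float  # center frequency of the resonance
    amplitude: float     # level of the mode
    decay_s: float       # time for this mode to fall by 60 dB
    phase_rad: float     # starting phase of the mode


def modal_impulse_response(modes, sample_rate_hz, num_samples):
    """Sum of exponentially decaying sinusoids, one per mode."""
    response = [0.0] * num_samples
    for mode in modes:
        decay_rate = math.log(1000.0) / mode.decay_s  # 60 dB is a factor of 1000
        omega = 2.0 * math.pi * mode.frequency_hz
        for n in range(num_samples):
            t = n / sample_rate_hz
            envelope = mode.amplitude * math.exp(-decay_rate * t)
            response[n] += envelope * math.cos(omega * t + mode.phase_rad)
    return response
```

[Because every mode has its own `decay_s`, a mode at 440 Hz can be given a long decay while its neighbors die quickly, which is one way to picture "putting the notes in the room."]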

I've got an EMT 140 plate reverb, and I EQ going in because of what rings out and might become too prevalent.

So, imagine if you had the ability to modify the room any way you want in that way. We'd been set to do this testing, but sadly in 1987, Isaak dropped dead of a heart attack. Ever since then, that's been in my head. If we could ever have enough processing power to represent rooms in this modal dimension, all kinds of fun would be possible. About ten years ago, I got a resume from someone at Stanford University, named Woody Herman, applying for an internship. In his resume was a mention that he had worked on a modal project. I brought him in as an intern, and he’s fantastic. He joined Eventide and has been with us since. In 2020 he worked with me – I'm useless, he did the work – creating the first modal reverb designed to either emphasize or de-emphasize the notes of the Western musical scale. We gave it to Kevin Killen [Tape Op #67], and he loved it. For the last two years we've been working on what I think is going to be an interesting product. I know it does what nothing else does. How useful it is, we'll find out. I can never guess that!

Do you think of it more as a creative tool? Is it creating new textures?

There's a lot to this. You can tell it to emphasize certain notes. In fact, you can target whether that emphasis is happening early on or later. One use we discovered, that wasn't obvious, is if you're playing a lead on guitar in the key of C, take those notes and tell it not to ring out early, so that your lead cuts through and then blooms. You can't do that with any other reverb. We had to come up with a name for it. Every name is used. It's Western scale right now – we can add other scales later – so, it's either well-tempered or ill-tempered, and it's an ambience, so we’re calling it Temperance.

I was looking at the name earlier, and I was like, “Why Temperance?” Tempered. Of course.

It makes sense to you. I don't know if it makes sense to anyone else.

It makes sense based on how we have to temper the scale to make it work. Every time my piano gets tuned I hear about this.

Yeah. It took us a while. A modal representation of rooms was known in the literature, and there are plenty of papers on it, but it turns out the implementation took some discovery. We have filed some patents that are not at all obvious but make it work brilliantly. We're dealing with 5,000 resonators, and each of them has four parameters. It's 20,000 parameters.

And a lot of processing.

You're turning a knob and that knob is changing 20,000 things. This has been a dream for me. It's a new playground. The people who’ve played with it are making really weird sounds. You'll see, in the pro version of this [Temperance Pro], you can dial back what we call density, which uses fewer resonators instead of this vast array. Then you get what I would consider "awful sounds," but I'm sure people will use them because nothing else sounds like that!

Something that suggests a whole new tonality.

That's what I've lived for. I've never been able to predict how people will use the stuff that I've been involved in. They asked me to write a quote for the press release and I said, “Well, I can't promise you that you'll find this useful, but I can promise you it'll do stuff to sound that nothing else does.” You can take an algorithmic reverb and create a modal model of it, or an IR reverb and create a modal model. But you can also start from scratch and make your own modal model of a room that never existed before. The free version [Temperance Lite] is going to have three spaces. It's going to have the modal model of the algorithmic SP 2016, a model of one of Ralph Kessler's best IR reverbs – because he's an expert – and it's going to have a space that no one's ever heard before, because it's a synthesized room in this modal space. You can turn this big knob, and suddenly any of those reverbs starts falling in love with notes or just shunning notes.

How many controls are available on the main panel?

There are basically four parameters that are involved for the tempering. This big knob [Temper] is at noon, which is off. If you're just at noon, it's just a modal reverb with normal reverb parameters. Turn to the right – love notes, turn left – hate notes. It will emphasize and de-emphasize the notes you choose. You have to select the notes you're going to mess with. Then there's a note width parameter [Size]. Do we want the reverb to consider an A to be 432 to 448 Hz? How wide? If you make the note real wide, you're going to hear more of that note. If you make it narrow, less of that note. Do you want this to happen to the beginning of the reverb, or do you only want that to happen at the end? We're calling that parameter Target. It also has the parameter called Density, where you're now breaking the space up into pieces and it gets really weird. You'll also find an Offset control, where you're taking what's coming in and shifting all of these modes back and forth. The fourth parameter is a tempering slider, where you can pick just the low notes, just the high notes, or all of the notes of the scale. There are also some parameters that are common to all reverbs, like Size, Decay, and Mix. I wonder if we’re doomed! No one's going to get any of this. No one has patience for it.
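[One way to picture the Temper knob and the note width, sketched in Python under loud assumptions: each mode's level is boosted or cut according to how close its frequency sits to a selected note of the equal-tempered scale. The window shape, the 12 dB range, the A4 = 440 Hz reference, and every function name are guesses made for illustration. Nothing here is taken from the Temperance plug-in itself, and the Target, Density, and Offset controls are not modeled.]

```python
import math

A4_HZ = 440.0  # assumed tuning reference


def cents_from_nearest_note(freq_hz, pitch_classes):
    """Distance in cents to the nearest selected pitch class (0 = C ... 9 = A)."""
    # Fractional MIDI-style note number, where 69 is A4.
    note = 69.0 + 12.0 * math.log2(freq_hz / A4_HZ)
    # Wrap each difference into the range [-6, 6) semitones before comparing.
    semitones = min(abs(((note - pc + 6.0) % 12.0) - 6.0) for pc in pitch_classes)
    return 100.0 * semitones


def temper_gain(freq_hz, pitch_classes, temper, width_cents, max_db=12.0):
    """Linear gain for one mode. temper runs from -1 (hate) to +1 (love); 0 is off."""
    distance = cents_from_nearest_note(freq_hz, pitch_classes)
    if distance >= width_cents:
        weight = 0.0  # outside the note's width: mode is left alone
    else:
        # Raised-cosine window: full effect on the note, fading to none at the edge.
        weight = 0.5 * (1.0 + math.cos(math.pi * distance / width_cents))
    return 10.0 ** (temper * max_db * weight / 20.0)


def temper_modes(frequencies_hz, amplitudes, pitch_classes, temper, width_cents):
    """One knob position rescales the level of every mode at once."""
    return [
        amp * temper_gain(freq, pitch_classes, temper, width_cents)
        for freq, amp in zip(frequencies_hz, amplitudes)
    ]
```

[In this sketch the "A from 432 to 448 Hz" question becomes a width of roughly 32 cents either side of 440 Hz, since `cents_from_nearest_note(432.0, [9])` comes out a little under 32. With `temper` at 0 every gain is 1, which matches the knob being off at noon.]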

As long as it does something whiz-bang right when you start it, then people are going to be curious.

Yeah, I hope. There are presets that you might go, “Oh, man, that's weird. I'll never use that.” The reason we're doing the free version is because I don't know how else to get people's attention. It's not just Eventide. Now that this is possible, other companies in the plug-in space are going to be doing modal reverbs. Our take is this musical approach, and that's how we're marketing it, as "the world's first musical reverb." <eventideaudio.com>